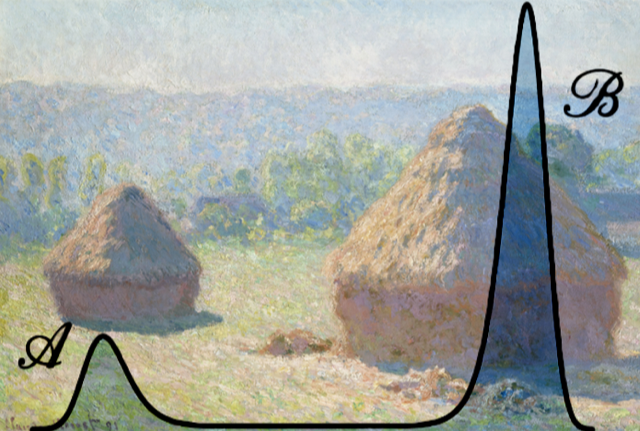

Probabilistic sampling for physics: finding needles in a field of high-dimensional haystacks

Institut Pascal

This 3-week meeting aims to bring together physicists and mathematicians working on stochastic sampling. The goal is to foster new interdisciplinary collaborations to address the challenges encountered when sampling probability distributions with high energy barriers and/or a large number of metastable states.

The challenges of metastability and slow mixing are faced by researchers across a diverse range of scientific fields, from fundamental problems in statistical mechanics to large-scale simulations of materials. In recent years, significant progress has been made on several aspects, including:

- design of Markov processes which break free from random-walk behavior,

- numerical methods for dimensionality reduction of input data,

- mode-hopping non-local moves by generative models,

- density region detection,

- analytical derivations of robustness guarantees.

By bringing together established experts and early-career researchers across a wide range of disciplines we aim to find new synergies to answer important and general questions, including:

- How can the fastest local mixing strategies be combined with mode-hopping moves?

- What physics can we preserve and extract along the dynamics?

- How to build reduced representations that can detect previously unseen events or regions?

The meeting will focus on implementing and comparing algorithms on benchmark datasets and models.

Week 1: Plenary talks covering a diverse range of open problems in different theoretical and numerical aspects of stochastic sampling. Flash talks from all participants. Brainstorming sessions.

Week 2: Presentations from leading and early-career researchers. Introduction of benchmark problems and implementation in Python code.

Week 3: Presentations from leading and early-career researchers. Collaboration and discussion with benchmark problems.

The three-week program will feature mornings of plenary and research talks, with afternoons largely allocated for discussion and collaboration.

To promote long-term and interdisciplinary collaborations, the afternoon sessions will focus on both the design and, importantly, the implementation of benchmark problems for stochastic sampling. This will allow the relative strengths of available methods to be assessed in detail, and will provide a concrete framework for collaborations. The final week will focus on using these benchmark problems to guide discussion on combining advances from different fields. The resulting work (original results and review articles) will be featured as a publication in Cahiers de l'Institut Pascal, published by EDP Sciences.

Colloquia, open to the general public, will be given by:

- Stéphane Mallat, ENS Paris (Wednesday, Sept. 6th, 10 AM)

- Freddy Bouchet, ENS Paris (Thursday, Sept. 14th, 3:30 PM)

- Alberto Rosso, Uni. Paris-Saclay (Wednesday, Sept. 20th, 3:30 PM)

Plenary talks will be given by:

- David Aristoff, Colorado State

- Mihai-Cosmin Marinica, CEA

- Alain Durmus, École Polytechnique

- Virginie Ehrlacher, École des Ponts ParisTech

- Marylou Gabrié, École Polytechnique

- Tim Garoni, Monash Uni.

- Pierre Jacob, ESSEC

- Tony Lelièvre, École des Ponts ParisTech

- Manon Michel, CNRS - Uni. Clermont-Auvergne

- Pierre Monmarché, Sorbonne Université

- Feliks Nüske, Max Planck Institute DCTS Magdeburg

- Misaki Ozawa, CNRS & Univ. Grenoble Alpes

- Danny Perez, Los Alamos National Laboratory

- Grant Rotskoff, Stanford University

- Beatriz Seoane, LISN, Univ. Paris-Saclay

- Gabriel Stoltz, École des Ponts ParisTech

- Thomas Swinburne, CNRS, Uni. Aix-Marseille

- Marija Vucelja, University of Virginia

- Martin Weigel, Chemnitz University

Research talks will be given by:

- Michael Albergo, New York Uni.

- Haochuan Chen, Uni. de Lorraine

- Aurélien Decelle, Complutense University of Madrid

- Jorge Fernandez de Cossio Diaz, ENS Paris

- Federico Ghimenti, Uni. de Paris Cité

- Tristan Guyon, CNRS, Uni. Clermont-Auvergne

- Léon Huet, Sorbonne Uni.

- Eiji Kawasaki, CEA

- Mouad Ramil, Seoul Uni.

- Vincent Souveton, CNRS, Uni. Clermont-Auvergne

We look forward to seeing you in Paris!

Manon Michel, CNRS & Univ. Clermont Auvergne

Thomas Swinburne, CNRS & Univ. Aix-Marseille

Scientific committee: Alain Durmus (École Polytechnique), Virginie Ehrlacher (École des Ponts), Guilhem Lavaux (IAP), Mihai-Cosmin Marinica (CEA), Martin Weigel (Chemnitz Uni)